Subatomic units

The atomic unit of the internet to date has been the "post": A blob of media and some metadata. All kinds of things change — from the structure of networks to the metaphors we use — when those blobs become inspectable.

March 14, 2016

I like to think about consumer internet in terms of atomic units. Google was about that lone spare box, the native interaction model for seeking information on the web. Twitter’s short burst of text was the right atomic unit for the thought, Instagram’s simple photo for a glossy slice of life, Snapchat's timebombs for the things you're seeing in the world that don't need to persist. And of course all the messengers' what-I-type-in-chat-bubbles-on-the-right-and-you-in-chat-bubbles-on-the-left for basic communication.

This is highly reductive, of course, and you could object that Facebook was never really about an atomic unit, at least in form if not function. You might then reduce it further (this blog post was written on #ReductiveMonday) and say that the atomic unit of all internet services, consumer or otherwise, was the post. You could define a "post" as a set of metadata — i.e., the user who posted, a timestamp of when they posted, and who they posted to — alongside some blob of media: a photo, a string of text, a video, an audio clip.

The formula for "the post": Metadata + Blob
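
As a rough sketch of that formula, here is how a "post" might be modeled in Python. The class and field names (`Metadata`, `Post`, `posted_by`, `posted_to`, `media_type`) and the sample values are made up for illustration and don't describe any particular service's schema. The point is only that the metadata is structured while the blob is just opaque bytes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Tuple


@dataclass(frozen=True)
class Metadata:
    """The structured, queryable half of a post (hypothetical field names)."""
    posted_by: str              # the user who posted
    posted_at: datetime         # when they posted
    posted_to: Tuple[str, ...]  # who they posted to


@dataclass(frozen=True)
class Post:
    """A post = metadata + blob."""
    metadata: Metadata
    media_type: str  # e.g. "photo", "text", "video", "audio"
    blob: bytes      # opaque media payload; nothing in here is inspectable


post = Post(
    metadata=Metadata(
        posted_by="alice",  # placeholder user
        posted_at=datetime(2016, 3, 14, tzinfo=timezone.utc),
        posted_to=("bob",),  # placeholder audience
    ),
    media_type="video",
    blob=b"\x00\x01\x02",  # placeholder bytes standing in for real media
)

# Everything a service can query lives in the metadata...
print(post.metadata.posted_by, "->", post.metadata.posted_to)
# ...while the blob only gives up its size.
print(len(post.blob), "bytes of", post.media_type)
```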


Looking back, you could also say the data model for posts has primarily been oriented around the metadata for each post, and less so around the blob of media (the actual data) that comprises it. When I post a Kanye West video to Facebook and @-mention my friend in it, Facebook could reasonably glean from its own metadata (<posted-by>Jonathan Libov</posted-by>) that I have an ongoing relationship with this person (<mentioned>Jane Doe</mentioned>). Facebook could also glean from the YouTube metadata, which has "Kanye West" listed explicitly in the title, that we both have strong feelings about Kanye West.

Likewise, Google's PageRank was initially oriented more around the metadata of a webpage (which pages linked to which other pages) than around the content of the page itself.

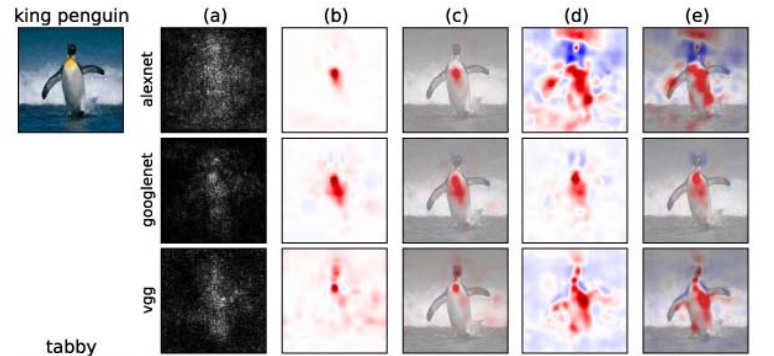

Of course Google and Facebook and others eventually got very good at analyzing text to extract insights: sentiment analysis, information extraction and so forth. More recently, we've seen remarkable advances in machine vision, speech to meaning, and other forms of machine intelligence. This means that Google and Facebook (as well as startups like Clarifai) can now see photos and videos; Diffbot can see webpages the way humans do (using implicit visual signals in addition to explicit data signals to extract meaning); and products like Siri, Google Now, api.ai, and Houndify can hear audio and have some idea what it's all about.

Suddenly all those bits that comprise the blobs — blobs of photos and video and audio — we're all posting to the internet are now inspectable. As a crude illustration, you could say that images on the internet have historically looked like this:

And moving forward will look a lot more like this:

It's as if we were living in a world of atoms and just discovered that there are quarks.

This is much more meaningful than what it implies for Facebook and Google understanding every aspect of everything we post. In a world of subatomic units on the internet, many of the metaphors we've built up over centuries no longer make sense. A photo, for example, has always been a rectangle with fine-grained hues and tints that in and of themselves mean nothing. But in a world where objects can be lifted from photos, maybe it will soon make more sense to think of a photo as a collection of (networked?) objects? Similarly, a song has always been a recorded audio track that gets replicated in its original form, but when something like Jukedeck can adjust the length of a song without changing its basic integrity, or create and destroy a song with no sentimentality, one wonders how long the notion of a song as a discrete, recorded audio track will remain fully intact.
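
One way to picture a photo as a collection of networked objects, sketched in Python under loud assumptions: the `DetectedObject` and `InspectablePhoto` classes, the labels, the file names, and the bounding boxes below are all invented for illustration, and the detections are hard-coded rather than produced by any real vision model.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class DetectedObject:
    """One thing lifted out of a photo (hypothetical structure)."""
    label: str
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class InspectablePhoto:
    """A photo treated as a set of objects rather than an opaque rectangle."""
    photo_id: str
    objects: List[DetectedObject] = field(default_factory=list)


def link_by_label(photos: List[InspectablePhoto]) -> Dict[str, Set[str]]:
    """Map each object label to the ids of the photos it appears in."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for photo in photos:
        for obj in photo.objects:
            index[obj.label].add(photo.photo_id)
    return dict(index)


# Hard-coded, made-up detections standing in for a vision model's output.
photos = [
    InspectablePhoto("beach.jpg", [
        DetectedObject("dog", (40, 60, 120, 90)),
        DetectedObject("surfboard", (200, 30, 80, 220)),
    ]),
    InspectablePhoto("park.jpg", [
        DetectedObject("dog", (10, 10, 100, 100)),
    ]),
]

index = link_by_label(photos)
print(sorted(index["dog"]))  # ['beach.jpg', 'park.jpg']
```

In this toy version, two photos that share no metadata at all end up connected through what is inside them.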

Seeing the internet (Source)


Much as physics appears to have gotten quite a bit more complicated since we acquired an understanding of subatomic particles, I suppose our notion of all media may become quite a bit more complex over the coming years. We think of photos as natural, impenetrable blobs, videos as a series of impenetrable images paired with impenetrable audio tracks, and songs as layers of impenetrable words and music. But if you grow up with an internet that doesn't make those distinctions, those metaphorical blobs may no longer seem all that atomic or natural.

Moreover, if it’s dizzying to imagine how subatomic units — objects in photos and videos, words in audio, and ideas in text — will so vastly increase the volume of the world’s data points, it’s flat out stupefying to imagine the networks that will be built between them.