Vibecoding is skeuomorphic because code generation is slow

Why exactly do vibecoding apps stream their decision-making and code? Because 1/ they’re optimized for a temporary state of affairs where code generation isn’t instantaneous and 2/ low-ish value customers are being over-served.

The best analogy for vibecoding apps: AI models are the iPhone camera and one or more apps are Instagram. Everyone, including the creative but unskilled, will be ready to create things they never would have been able to create before.

I’m here to point out that while that may be largely true, we’re still stuck in an old, skeuomorphic paradigm when it comes to coding, such that the future doesn’t look merely like more of the present.

1. Technical people use no-code tools better than non-technical people

No-code tools promised something similar to the Instagram + iPhone camera bundle: You don’t need technical expertise to build internal tooling. The problem is that, at least in my experience, technical people are much better at using no-code tools than non-technical people are.

No-code saves technical people time deploying things internally more than it does empowering non-technical people to build on their own. This is selection bias: The types of people who are good at building tools now would have already found it irresistible to learn to code and understand how applications work. Similarly, the types of people who are good at making games now would have already learned Scratch, Phaser or Unity.

2. It’s revealing that vibecoding tools show so much code

Maybe vibecoding is the final realization of no-code. In other words, UIs for creating flows weren’t good enough; you need inference and conversational UIs to extract the architecture from a no-coder’s head and the creativity and expertise of an LLM to construct the application.

I’d proffer a different idea, consistent with the high degree of churn we see today in vibecoding and similar AI creation apps: There’s a lot of pent-up FOMO from people who didn’t get to participate in the upside of the app explosion or make the money one could have made being an engineer over the last 15 years. Showing code being written and deployed in a chat is something of a painkiller for that FOMO. It elicits the feeling “I’m finally writing code,” which is one half of the reason why vibecoding apps stream their output.

This is productivity muzak

3. Conversational UIs might not be better but they are more comfortable

I’m not entirely sure where this obsession with conversational interfaces comes from. Perhaps it’s a type of anemoia, a nostalgia for a future we saw in Star Trek that never became reality. Or maybe it’s simply that people look at the term “natural language” and think “well, if it’s natural then it must be the logical end state”...I’m here to tell you that it’s not.

...Natural language is great for data transfer that requires high fidelity (or as a data storage mechanism for async communication), but whenever possible we switch to other modes of communication that are faster and more effortless. Speed and convenience always wins.

This relates to the selection bias point above: Vibecoding appeals to people who find it a very comfortable way to participate in the app economy. People genuinely interested in productivity are generally already technical, write informed, opinionated specs, and use command-line runtimes to leverage AI for productivity.

The main reason vibecoding UX is the way it is now

There’s yet another reason why vibecoding apps are conversational: They’re so slow that they need to entertain you while you wait. The stream of code is productivity muzak.

Were code generation instantaneous, it’s obvious that instead of using a conversational UI in a vibecoding app, you’d type something into Figma and edit it on a GUI-enriched canvas, similar to how you search for and then edit templates in Figma already. Why show code at all?

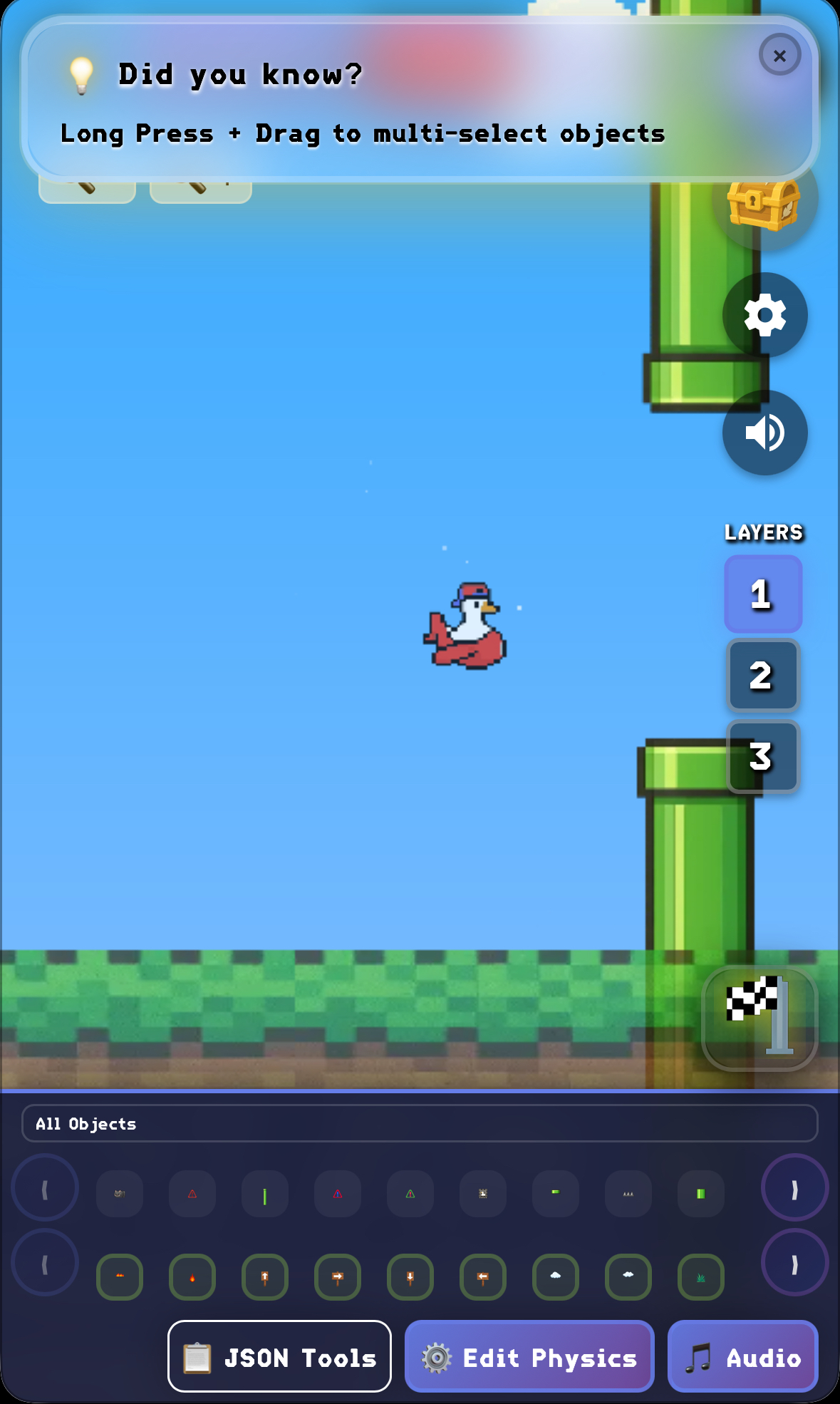

How to edit a game on a phone

To revisit the iPhone camera + Instagram analogy: the iPhone camera doesn’t stream the bytes it writes to a HEIC file, nor does Instagram stream the bytes it’s sending over the wire for the 750ms it might take to upload the photo. Professional photographers don’t care how their photos are written to disk, let alone want to watch it print on their screen.

Today's vibecoding apps stream their JavaScript output, which is too low-level an abstraction. Ask yourself: why do they stream JavaScript and not the bytecode that JavaScript compiles down to? Because the skeuomorphism elides the fact that vibecoding cycles take a minute.

One more skeuomorph

In this thought experiment where code generation is instantaneous, the term “app” doesn’t totally make sense for what’s exported from a vibecoding session. “Apps” imply something freestanding and enduring, whereas the speed with which they’ll eventually be created contradicts the singularity of labor and love we’ve come to expect from apps.

We’re not far off the day when software will become indistinguishable from media or content, what we once called “multimedia”. Today we all produce a lot of photos and videos that you can’t interact with; in the future some portion of that stuff will be more clickable.

Addendum

If we're in an AI hype cycle right now, it’s because the super extraordinary investment in AI is outstripping the still extraordinary demand for generative AI. Exceptions: Prosumers are obviously consuming and paying for AI, and Replit appears to benefit from a rapt audience of the young, quick-twitch, and technically skilled.

Thanks

¯\_(ツ)_/¯